“We should have some ways of connecting programs like garden hose—screw in another segment when it becomes necessary to massage data in another way.”

With this quote from 1964, Douglas McIlroy described in one sentence the idea behind Unix pipes, which were implemented in Unix in 1973. The same concept underlies streams in Node.js. In this respect, the native streams of Node.js work much like the observables in Reactive Extensions for JavaScript (RxJS).

What Is a Stream?

With both Unix pipes and streams, you have a data source to which you can connect any number of modules, ultimately directing the data stream to a specific destination. This may sound abstract at first, but Unix pipes illustrate it quite clearly. In the listing below, the ls command, which lists the files and directories in the specified directory, serves as the data source. The output of this command is passed as input to the grep command, which displays only the entries that match a specific pattern. This filtered list is finally the input for the text viewer less. Applied to Node.js, each of the three commands represents a stream, and the output of each stream is connected to the input of the next one.

$ ls -l /usr/local/lib/node_modules | grep 'js' | less
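A rough Node.js analogue of this command line could look like the following minimal sketch. The sample array stands in for the output of ls, the hypothetical grepStream helper plays the role of grep, and an in-memory collector replaces less. These names are assumptions made for illustration; only Readable.from, Transform, Writable, and pipeline come from the Node.js stream module.

```javascript
const { Readable, Transform, Writable } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// Hypothetical helper: lets only entries containing `pattern` pass (like grep).
function grepStream(pattern) {
  return new Transform({
    objectMode: true,
    transform(entry, _encoding, callback) {
      // Calling the callback without data drops the entry.
      if (entry.includes(pattern)) callback(null, entry);
      else callback();
    },
  });
}

// Source, filter, and destination wired together like `ls | grep | less`.
async function listMatching(entries, pattern) {
  const matches = [];
  const sink = new Writable({
    objectMode: true,
    write(entry, _encoding, callback) {
      matches.push(entry);
      callback();
    },
  });
  await pipeline(Readable.from(entries), grepStream(pattern), sink);
  return matches;
}

// Sample data standing in for the output of `ls` (assumed, not real).
const sampleEntries = ['express', 'ejs', 'js-yaml', 'typescript', 'eslint'];
listMatching(sampleEntries, 'js').then((matches) => console.log(matches));
```

Each stage only knows its own task, so you could swap the filter for a different transform without touching the source or the destination.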

Stream Usages

Simply put, streams are used to handle input and output. In this context, both are considered as a continuous data stream. The defined interface on which all streams are based allows you to combine streams very flexibly. This, in turn, corresponds to the modular concept of Node.js. Each module—each individual stream implementation in this case—is supposed to perform only one specific task and leave everything else to the next stream in the chain.

Streams can therefore be used wherever you’re dealing with a continuous flow of data, that is, almost anywhere. You can see this when you take a closer look at the Node.js platform. Almost all important modules implement the stream API. Both the file system module and the web server have data streams, and most database drivers also provide you with the ability to stream information out of or into the database.
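As an illustration of a core module that exposes streams, the following sketch copies a file with the readable and writable streams of the file system module. The helper names copyWithStreams and demo, the temporary directory prefix, and the file names are assumptions chosen for this example.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');
const { pipeline } = require('node:stream/promises');

// Copies a file chunk by chunk instead of reading it into memory at once.
async function copyWithStreams(sourcePath, targetPath) {
  await pipeline(
    fs.createReadStream(sourcePath),  // readable stream from the fs module
    fs.createWriteStream(targetPath), // writable stream from the fs module
  );
}

// Creates a small file in a temporary directory, copies it, returns the copy's content.
async function demo() {
  const dir = await fs.promises.mkdtemp(path.join(os.tmpdir(), 'streams-'));
  try {
    const source = path.join(dir, 'source.txt');
    const target = path.join(dir, 'copy.txt');
    await fs.promises.writeFile(source, 'streamed through the fs module\n');
    await copyWithStreams(source, target);
    return await fs.promises.readFile(target, 'utf8');
  } finally {
    // Remove the temporary directory again.
    await fs.promises.rm(dir, { recursive: true, force: true });
  }
}

demo().then((content) => process.stdout.write(content));
```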

Streams show their strength especially when it comes to transforming data. As an example, suppose you have a database from which you read information. That would be the first stream in the chain. The second stream transforms the information so that your application can process it further. In a third step, you encrypt the information, and the last stream sends it to another computer through a Transmission Control Protocol (TCP) connection. The advantage is that you never hold the entire contents of the database in memory at once, only small chunks, which significantly reduces the memory utilization of your application.

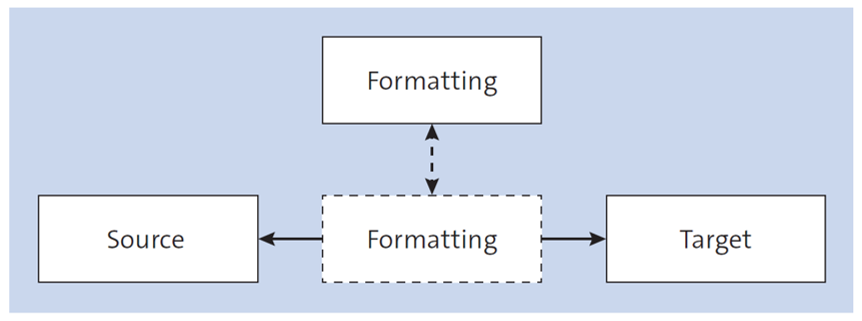

Now, if the way you need to put the data into shape changes, all you have to do is implement that and replace the second stream in the chain. All other stream modules remain unchanged. The figure above illustrates this.
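The chain described here can be sketched as follows, under a few stated assumptions: an in-memory array stands in for the database, a collecting writable stream (fakeSocket) stands in for the TCP connection, and AES-256-CTR is just one possible cipher. The names toJsonLines, toCsvLines, and sendRows are hypothetical. The point of the sketch is that only the second stage changes when the required output format changes.

```javascript
const crypto = require('node:crypto');
const { Readable, Transform, Writable } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// Stage 2, variant A: serialize each row as one JSON line.
const toJsonLines = () => new Transform({
  writableObjectMode: true, // receives row objects, emits text
  transform(row, _encoding, callback) {
    callback(null, JSON.stringify(row) + '\n');
  },
});

// Stage 2, variant B: serialize each row as a CSV line instead.
const toCsvLines = () => new Transform({
  writableObjectMode: true,
  transform(row, _encoding, callback) {
    callback(null, `${row.id},${row.name}\n`);
  },
});

// Builds the chain; only `format` differs when the output shape changes.
async function sendRows(rows, format, key, iv) {
  const received = [];
  // Stand-in for a TCP socket, which would be the real destination.
  const fakeSocket = new Writable({
    write(chunk, _encoding, callback) {
      received.push(chunk);
      callback();
    },
  });
  await pipeline(
    Readable.from(rows),                           // stage 1: "database" source
    format(),                                      // stage 2: replaceable transform
    crypto.createCipheriv('aes-256-ctr', key, iv), // stage 3: encryption
    fakeSocket,                                    // stage 4: destination
  );
  return Buffer.concat(received);
}

const rows = [{ id: 1, name: 'Ada' }, { id: 2, name: 'Linus' }];
const key = crypto.randomBytes(32); // AES-256 needs a 32-byte key
const iv = crypto.randomBytes(16);  // and a 16-byte initialization vector
sendRows(rows, toJsonLines, key, iv)
  .then((encrypted) => console.log(`sent ${encrypted.length} encrypted bytes`));
```

Passing toCsvLines instead of toJsonLines changes the format without any change to the source, the encryption stage, or the destination.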

To enable you to build such a pipeline of individual streams, several types of streams are available for use at different points in a chain.

Available Streams

Node.js provides several types of streams. Each stream serves a specific purpose:

- Readable streams: A stream of this class represents a data source that can be read from. A typical representative is the request object of the HTTP server, which represents an incoming request.

- Writable streams: A writable stream accepts data and thus forms the end of a stream chain. Analogous to the request object, the response object of the HTTP server is a writable stream.

- Duplex streams: This stream type is both readable and writable. You can use a duplex stream as a step between a readable stream and a writable stream. In most cases, however, you’ll use transform streams.

- Transform streams: Data can be both written to and read from this type of stream. As the name suggests, transform streams are used when the goal is to create a specific output stream from an input stream. Transform streams are based on duplex streams, but their API is simpler.
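To make the four types more concrete, here is a minimal sketch that places a transform stream between a readable source and a writable destination. The helper names createUpperCase and shout are assumptions for this example; the classes themselves are exported by the Node.js stream module.

```javascript
const { Readable, Writable, Duplex, Transform } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// Transform stream: uppercases whatever text passes through it.
function createUpperCase() {
  return new Transform({
    transform(chunk, _encoding, callback) {
      callback(null, chunk.toString().toUpperCase());
    },
  });
}

// Readable source, transform step, writable destination.
async function shout(parts) {
  let output = '';
  const destination = new Writable({
    write(chunk, _encoding, callback) {
      output += chunk.toString();
      callback();
    },
  });
  await pipeline(Readable.from(parts), createUpperCase(), destination);
  return output;
}

// A transform stream is a specialized duplex stream.
console.log(createUpperCase() instanceof Duplex);
shout(['readable ', 'to ', 'writable']).then((text) => console.log(text));
```

Implementing a single transform function is usually all a transform stream needs, whereas a plain duplex stream requires separate read and write implementations.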

If you work with Node.js streams, then sooner or later, you’ll come across the different versions of the stream API.

Stream Versions in Node.js

Over the course of the development of the Node.js platform, both the interface and the usage of streams have changed. There are three versions of the stream API, each with its own characteristics:

- Version 1 (push streams): Streams have been around in Node.js since the beginning. In this original version, the data source controlled the data flow. The data was written to the stream, and the next stage in the chain had to make sure that it could receive the data accordingly.

- Version 2 (pull streams): In the first version of the stream API, the subsequent stages in a stream chain had no control over the data flow. To solve this problem, the developers introduced a concept referred to as pull streams. Here, the subsequent stages in the stream chain actively fetch the data from the preceding stages. This change made its way into the platform with version 0.10 of Node.js.

- Version 3 (push and pull streams): Until the release of the third version of the stream API, it wasn’t possible to run a stream in both pull and push modes. With io.js and Node.js in version 0.11, this problem was addressed. Today, you can use a stream in both variants without having to generate a new instance.

Normally, you’ll use the third version of the stream API, which allows for flexible switching between the push and pull modes. The pull variant is used by default.
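The difference between the two modes can be sketched with a readable stream as follows. The function names consumeByPulling, consumeByPushing, and modeStates are hypothetical; the readable and data events, the read, pause, and destroy methods, and the readableFlowing property are part of the readable stream API.

```javascript
const { Readable } = require('node:stream');

// Pull (paused) mode: the consumer asks for data with read().
function consumeByPulling(stream) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    stream.on('readable', () => {
      let chunk;
      // read() returns null once the internal buffer is empty.
      while ((chunk = stream.read()) !== null) chunks.push(chunk);
    });
    stream.on('end', () => resolve(chunks));
    stream.on('error', reject);
  });
}

// Push (flowing) mode: a 'data' listener makes the stream deliver chunks itself.
function consumeByPushing(stream) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    stream.on('data', (chunk) => chunks.push(chunk));
    stream.on('end', () => resolve(chunks));
    stream.on('error', reject);
  });
}

// One and the same instance can change its mode.
function modeStates() {
  const stream = Readable.from(['x']);
  const states = [stream.readableFlowing]; // null: no consumer attached yet
  stream.on('data', () => {});
  states.push(stream.readableFlowing);     // true: flowing (push) mode
  stream.pause();
  states.push(stream.readableFlowing);     // false: paused
  stream.destroy();
  return states;
}

consumeByPulling(Readable.from(['a', 'b', 'c'])).then((chunks) => console.log('pulled', chunks));
consumeByPushing(Readable.from(['a', 'b', 'c'])).then((chunks) => console.log('pushed', chunks));
console.log(modeStates());
```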

Streams Are EventEmitters

Streams are asynchronous, which means your source code doesn’t follow a simple top-to-bottom flow; instead, you register callback functions. To make this work, every stream is an instance of EventEmitter.

For you as a developer, this means that all actions in a stream are represented by events to which you can bind callback functions. This also means you can bind multiple callback functions to a single stream event, which gives you a greater degree of flexibility.

Thus, when implementing the stream API, Node.js again follows the familiar pattern of reuse. You can bind callbacks as usual via the on method, trigger events using the emit method, remove event listeners, or bind callbacks to an event only once.
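The EventEmitter methods mentioned here can be tried out on a readable stream, as in the following sketch. The observe function and the checkpoint event are made up for this example; on, once, off, and emit are regular EventEmitter methods.

```javascript
const { EventEmitter } = require('node:events');
const { Readable } = require('node:stream');

// Records which listeners were called, in order, for the given chunks.
function observe(chunks) {
  return new Promise((resolve, reject) => {
    const stream = Readable.from(chunks);
    const log = [];

    // Custom event (hypothetical name), triggered manually with emit().
    stream.on('checkpoint', (label) => log.push(`checkpoint:${label}`));
    stream.emit('checkpoint', 'start'); // listeners run synchronously

    // Two independent listeners on the same 'data' event.
    stream.on('data', (chunk) => log.push(`first:${chunk}`));
    stream.on('data', (chunk) => log.push(`second:${chunk}`));

    // once() fires for the first chunk only, then removes itself.
    stream.once('data', (chunk) => log.push(`once:${chunk}`));

    // A listener that is removed again before any data arrives.
    const removed = (chunk) => log.push(`removed:${chunk}`);
    stream.on('data', removed);
    stream.off('data', removed);

    stream.on('end', () => resolve(log));
    stream.on('error', reject);
  });
}

console.log(Readable.from([]) instanceof EventEmitter);
observe(['a', 'b']).then((log) => console.log(log));
```

Listeners for the same event are called in the order in which they were registered, which is why the log lists the first listener before the second one for every chunk.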

With this information at hand, you can now move on to using the stream API. First, you’ll learn more about the readable streams of Node.js.

Editor’s note: This post has been adapted from a section of the book Node.js: The Comprehensive Guide by Sebastian Springer. Sebastian is a JavaScript engineer at MaibornWolff. In addition to developing and designing both client-side and server-side JavaScript applications, he focuses on imparting knowledge. As a lecturer for JavaScript, a speaker at numerous conferences, and an author, he inspires enthusiasm for professional development with JavaScript. Sebastian was previously a team leader at Mayflower GmbH, one of the premier web development agencies in Germany. He was responsible for project and team management, architecture, and customer care for companies such as Nintendo Europe, Siemens, and others.

This post was originally published 4/2024.
